Large language models (LLM)

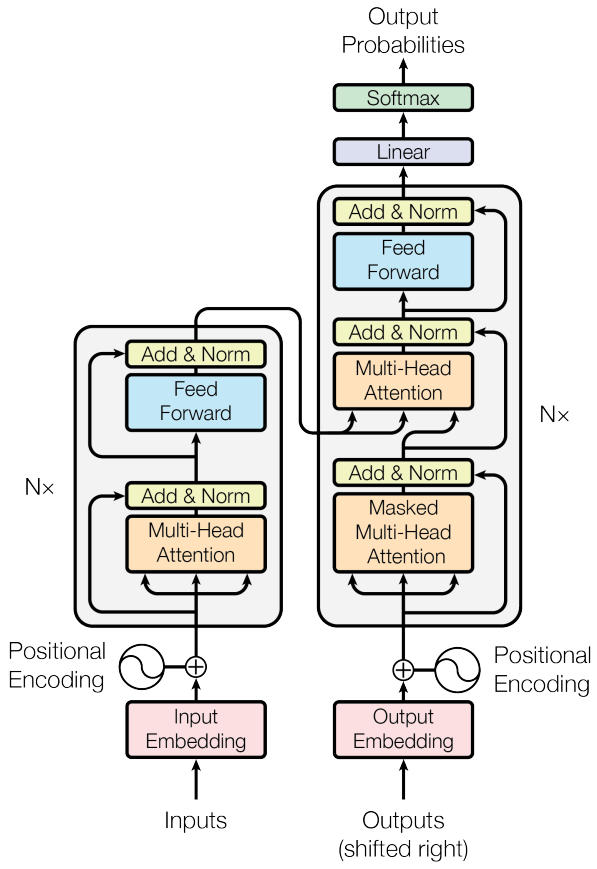

The transformer network, shown in the image, was introduced in 2017 in the seminal paper "Attention Is All You Need". It is a state-of-the-art deep learning architecture and the basis of large language models.

Its characteristic property is that, for each token (e.g. a character, subword, or word), a triple (key, query, value) is computed and used to identify relations between tokens. More precisely, scalar products between one query and multiple keys determine how strongly each token's value contributes. This mechanism is called attention. If a token must not be influenced by tokens later in the stream, a mask restricts its attention to the keys of tokens that do not come later. In a translation task, for example, the input is fully known and needs no mask, whereas the output is generated token by token, so an output token may not be influenced by tokens later in the output stream.
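The attention computation described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function names `softmax` and `attention` are hypothetical, and the scaling by the square root of the key dimension and the softmax normalization follow the original paper rather than anything stated in this text. Real models also derive keys, queries, and values from learned projections, which are omitted here.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of equal-length vectors.

    With causal=True, the query at position i only sees keys at positions <= i.
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scalar product between one query and every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        if causal:
            # Mask the keys of later tokens by setting their score to -inf,
            # which becomes a weight of exactly zero after the softmax.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

# Toy example: three tokens with two-dimensional vectors (assumed values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
masked = attention(tokens, tokens, tokens, causal=True)
unmasked = attention(tokens, tokens, tokens, causal=False)
```

With the mask enabled, the first token can only attend to itself, so `masked[0]` equals its own value vector, while `unmasked[0]` is a mixture of all three values.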

The transformer consists of two parts, the encoder and the decoder, shown on the left and right of the image, respectively. The encoder transforms text into an internal representation, while the decoder optionally uses this internal representation to generate text. Depending on the task, one of the two parts alone is sufficient. Text classification can be done with only the encoder, whose internal representation is fed into an additional classifier. Text generation can be performed with only the decoder, where an optional user prompt forms the start of its input.

If the decoder uses attention from the encoder, it is called cross-attention: the keys and values come from the encoder, while the queries come from the decoder. Cross-attention is not masked, whereas attention computed within the decoder (self-attention) is masked. This explains the presence of two different attention mechanisms in the decoder.
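The difference between the two decoder attention mechanisms can be made concrete by looking at which positions each one may attend to. The following is a small sketch with hypothetical helper names (`causal_mask`, `cross_mask`); `True` means that the decoder position in that row may attend to the position in that column.

```python
def causal_mask(n_target):
    """Masked self-attention in the decoder: position i may attend to j <= i."""
    return [[j <= i for j in range(n_target)] for i in range(n_target)]

def cross_mask(n_target, n_source):
    """Cross-attention: every decoder position may attend to every encoder position."""
    return [[True] * n_source for _ in range(n_target)]

# Assumed toy sizes: 3 output tokens generated so far, 4 input tokens.
self_attention_mask = causal_mask(3)
cross_attention_mask = cross_mask(3, 4)
```

The self-attention mask is square and lower triangular because output tokens are compared with each other, while the cross-attention mask has one row per output token and one column per input token and contains no restrictions.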

Since a transformer has a huge number of parameters, it benefits greatly from being trained on a large amount of data. Therefore, it is common practice to take an encoder or decoder that was pre-trained on large amounts of automatically labeled data, combine it with additional neural networks, and fine-tune them jointly for the specific task at hand.

Other tasks, such as sentiment analysis, are also possible; see the section below for a demo.